Introduction

In today’s data-driven world, efficiently moving data between different formats and storage systems is crucial. Parquet files, known for their efficient columnar storage format and compression capabilities, are widely used in big data analytics. SQLite, on the other hand, is a popular choice for embedded databases and local applications due to its serverless architecture and reliability.

However, transferring data from Parquet files to SQLite traditionally involves complex processes:

- Writing custom code to handle Parquet file reading

- Managing data type conversions

- Implementing efficient bulk loading mechanisms

- Dealing with schema differences

- Handling large datasets with limited memory

Enter Sling: a modern data movement tool that simplifies this process dramatically. Sling provides an intuitive interface for transferring data between various sources and destinations, including Parquet files and SQLite databases. Key advantages include:

- Automated schema mapping and creation

- Efficient bulk loading capabilities

- Built-in data type conversion

- Memory-efficient processing

- Simple command-line interface

- Flexible configuration options

In this guide, we’ll walk through the process of using Sling to efficiently migrate data from Parquet files to SQLite databases. Whether you’re working with small datasets or large-scale data migrations, you’ll learn how to leverage Sling’s features to streamline your data pipeline.

Installation

Getting started with Sling is straightforward. The tool can be installed on various operating systems using different package managers. Let’s go through the installation process step by step.

System Requirements

Before installing Sling, ensure your system meets these basic requirements:

- Operating System: Linux, macOS, or Windows

- Disk Space: Minimum 100MB for installation

- Memory: Minimum 512MB RAM (recommended 1GB+ for larger datasets)

- Internet connection for installation and updates

Installing Sling

Choose the installation method that best suits your operating system:

# Install using Homebrew (macOS)

brew install slingdata-io/sling/sling

# Install using curl (Linux)

curl -LO 'https://github.com/slingdata-io/sling-cli/releases/latest/download/sling_linux_amd64.tar.gz' \

&& tar xf sling_linux_amd64.tar.gz \

&& rm -f sling_linux_amd64.tar.gz \

&& chmod +x sling

# Install using Scoop (Windows)

scoop bucket add sling https://github.com/slingdata-io/scoop-sling.git

scoop install sling

# Install using Python pip

pip install sling

After installation, verify that Sling is properly installed by checking its version:

# Check Sling version

sling --version

For more detailed installation instructions and system-specific requirements, visit the Sling CLI Getting Started Guide.

Environment Setup

Sling uses a configuration directory to store connection details and other settings. The configuration directory is typically located at:

- Linux/macOS:

~/.sling/ - Windows:

C:\Users\<username>\.sling\

The first time you run Sling, it will automatically create this directory and a default configuration file. You can also specify a custom location using the SLING_HOME_DIR environment variable.

Setting Up Connections

Before we can start moving data, we need to configure our source (local Parquet files) and target (SQLite) connections. Sling provides multiple ways to manage these connections securely.

Local Storage Connection

For local Parquet files, Sling automatically configures a default connection named LOCAL. You don’t need any additional configuration for accessing local files. The LOCAL connection allows you to read files from your local filesystem using paths prefixed with file://.

SQLite Connection Setup

For SQLite, you have several options to set up the connection. Here are the different methods:

Using Environment Variables

The simplest way to set up a SQLite connection is through environment variables:

# Set up SQLite connection using environment variable

export SQLITE='sqlite:///path/to/database.db'

Using the Sling CLI

A more secure and maintainable approach is to use Sling’s connection management commands:

# Set up SQLite connection using sling conns set

sling conns set sqlite_db type=sqlite database=/path/to/database.db

Using YAML Configuration

For a more permanent setup, you can define your connections in the ~/.sling/env.yaml file:

connections:

sqlite_db:

type: sqlite

database: /path/to/database.db

Testing Connections

After setting up your connections, it’s important to verify they work correctly:

# Test SQLite connection

sling conns test sqlite_db

# Test local connection

sling conns test local

Connection Management Best Practices

Security

- Store sensitive credentials in environment variables

- Use

.envfiles for local development - Never commit credentials to version control

Naming Conventions

- Use descriptive connection names

- Follow a consistent naming pattern

- Include environment indicators when needed

Configuration

- Keep connection configurations in version control (without credentials)

- Document connection requirements

- Use relative paths when possible

For more information about connection management and environment variables, refer to the Sling CLI Environment documentation.

Using CLI Flags for Data Sync

Sling’s command-line interface provides a quick way to transfer data using CLI flags. This approach is perfect for one-off transfers or when you want to test your data pipeline before creating a more permanent configuration.

Basic Example

Here’s a simple example of loading a Parquet file into a SQLite table:

# Load a Parquet file into a SQLite table

sling run \

--src-conn local \

--src-stream "file://data/sales.parquet" \

--tgt-conn sqlite_db \

--tgt-object "sales"

This command:

- Uses the

localconnection to read the Parquet file - Specifies the source file path with

file://prefix - Uses our configured SQLite connection

- Creates or updates the

salestable in SQLite

Advanced Example with Options

For more control over the data transfer process, you can use additional CLI flags:

# Advanced Parquet to SQLite transfer with options

sling run \

--src-conn local \

--src-stream "file://data/sales.parquet" \

--src-options '{ "empty_as_null": true }' \

--tgt-conn sqlite_db \

--tgt-object "sales" \

--tgt-options '{ "column_casing": "snake", "table_keys": { "primary": ["id"] } }' \

--mode incremental \

--update-key "updated_at"

This advanced example includes:

- Source options for handling empty values

- Target options for column naming and primary key

- Incremental mode with an update key

- Automatic schema creation and data type mapping

Common CLI Flags

Here are some useful CLI flags for Parquet to SQLite transfers:

Source Options

empty_as_null: Convert empty strings to NULLdatetime_format: Specify datetime format for parsingflatten: Flatten nested Parquet structures

Target Options

column_casing: Control column name casing (snake, lower, upper)table_keys: Define primary and unique keysadd_new_columns: Automatically add new columnstable_ddl: Custom DDL for table creation

Mode Options

mode: full-refresh, incremental, truncateupdate-key: Column for incremental updatesprimary-key: Column(s) for record identification

For a complete list of available CLI flags and options, visit the CLI Flags Overview.

Using Replication YAML

While CLI flags are great for quick transfers, replication YAML files provide a more maintainable and version-controlled way to define your data pipelines. Let’s explore how to use YAML configurations for Parquet to SQLite transfers.

Basic Replication Example

Here’s a simple replication YAML file that loads a single Parquet file into SQLite:

# basic_replication.yaml

source: local

target: sqlite_db

streams:

file://data/sales.parquet:

object: sales

mode: full-refresh

source_options:

empty_as_null: true

target_options:

column_casing: snake

To run this replication:

# Run the replication

sling run -r basic_replication.yaml

Advanced Replication with Multiple Streams

Here’s a more complex example that handles multiple Parquet files with different configurations:

# advanced_replication.yaml

source: local

target: sqlite_db

defaults:

mode: incremental

source_options:

empty_as_null: true

target_options:

column_casing: snake

add_new_columns: true

streams:

file://{data_dir}/sales_*.parquet:

object: sales

update_key: updated_at

primary_key: [id]

target_options:

table_keys:

primary: [id]

unique: [order_number]

file://{data_dir}/customers.parquet:

object: customers

mode: full-refresh

transforms:

email: lower

status: trim

target_options:

table_keys:

primary: [customer_id]

batch_size: '{batch_size}'

env:

data_dir: /path/to/data

batch_size: 10000

This advanced configuration includes:

- Multiple stream definitions

- Default options for all streams

- Stream-specific configurations

- Data transformations

- Environment variables

- Table key definitions

Using Runtime Variables

Sling supports runtime variables in replication YAML files. These are useful for dynamic file paths and table names:

# dynamic_replication.yaml

source: local

target: sqlite_db

streams:

"file://myfile.parquet":

object: "{stream_file_name}"

mode: full-refresh

Replication YAML Best Practices

Organization

- Use descriptive stream names

- Group related streams together

- Leverage defaults for common settings

Configuration

- Use environment variables for paths and credentials

- Include comments for complex configurations

- Version control your YAML files

Maintenance

- Keep configurations modular

- Document any special handling

- Use consistent naming conventions

For more details about replication configuration and available options, refer to:

Sling Platform Overview

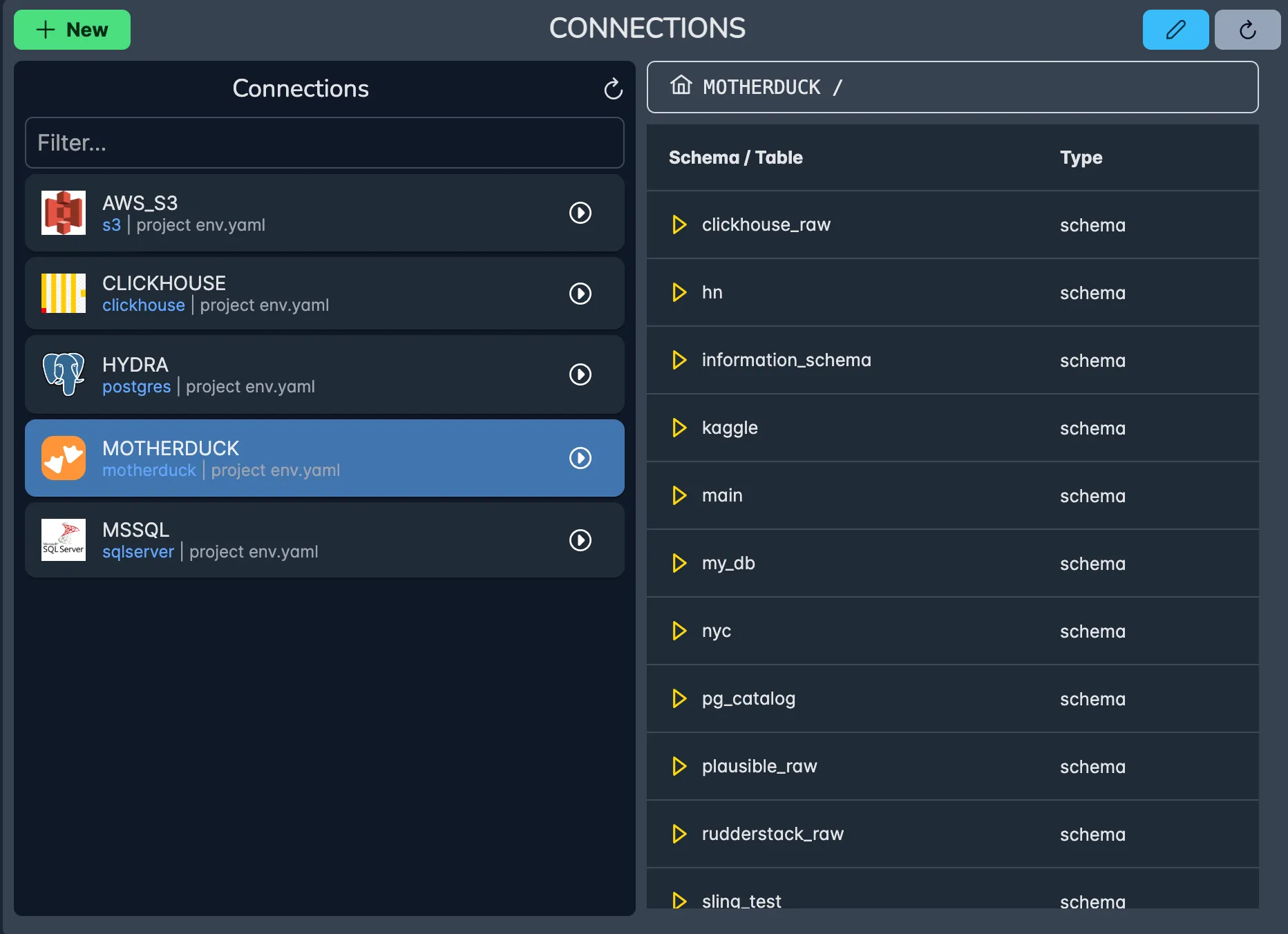

While the CLI is powerful for local development and automation, the Sling Platform provides a comprehensive web-based interface for managing and monitoring your data operations at scale. Let’s explore what the platform offers for Parquet to SQLite migrations.

Key Features

The Sling Platform extends the CLI’s capabilities with:

Visual Interface

- Drag-and-drop replication builder

- Real-time monitoring dashboard

- Visual data preview and profiling

- Connection management UI

Team Collaboration

- Role-based access control

- Shared connection management

- Team activity monitoring

- Collaborative troubleshooting

Advanced Monitoring

- Real-time pipeline status

- Detailed execution logs

- Performance metrics

- Error tracking and alerts

Getting Started with the Platform

To begin using the Sling Platform:

- Sign up at app.slingdata.io

- Create your organization

- Install and configure a Sling Agent

- Set up your connections

- Create your first replication

Platform Components

Sling Agents

Agents are the workers that execute your data operations:

- Run in your own infrastructure

- Secure access to your data sources

- Support for both development and production environments

- Automatic updates and health monitoring

Connection Management

The platform provides a secure way to manage connections:

- Centralized credential management

- Connection health monitoring

- Easy testing and validation

- Support for multiple environments

Replication Builder

The visual replication builder makes it easy to:

- Design data pipelines

- Configure transformations

- Set up scheduling

- Monitor execution

For more information about the Sling Platform and its features, visit the Platform Getting Started Guide.

Best Practices and Next Steps

Let’s wrap up with some best practices for using Sling in your Parquet to SQLite data pipelines, along with suggestions for next steps.

Performance Optimization

Batch Size Management

- Adjust batch sizes based on your data volume

- Monitor memory usage during transfers

- Use appropriate compression settings

Resource Utilization

- Schedule large transfers during off-peak hours

- Monitor disk space on both ends

- Consider network bandwidth limitations

Data Type Handling

- Use appropriate data types in SQLite

- Handle NULL values consistently

- Consider column precision requirements

Common Use Cases

Sling’s Parquet to SQLite capabilities are particularly useful for:

Local Analytics

- Converting big data exports for local analysis

- Creating portable databases from data lake exports

- Building offline-capable applications

Development and Testing

- Creating test databases from production data samples

- Prototyping data models

- Quick data exploration

Data Distribution

- Packaging data for mobile applications

- Creating embedded databases

- Distributing reference data

Additional Resources

To learn more about Sling and its capabilities:

Documentation

Examples and Tutorials

Connection Guides

Conclusion

Sling simplifies the process of transferring data from Parquet files to SQLite databases, offering both command-line and platform-based solutions. Whether you’re working on a small local project or managing enterprise-scale data operations, Sling provides the tools and flexibility you need.

By following the practices and examples in this guide, you can:

- Set up efficient data pipelines

- Automate your data transfers

- Maintain data integrity

- Scale your operations as needed

Remember that Sling is continuously evolving, with new features and improvements being added regularly. Stay updated with the latest developments by following the Sling documentation.