Export PostgreSQL Data to BigQuery Using Sling

Moving data from PostgreSQL to Google BigQuery is a common requirement in modern data architectures. Whether you’re consolidating data warehouses, setting up analytics pipelines, or migrating databases, having a reliable and efficient tool is crucial. Sling provides a streamlined solution for this exact scenario.

Sling is a modern data movement and transformation platform that makes it simple to transfer data between different systems. With its powerful CLI tool and intuitive platform interface, you can set up PostgreSQL to BigQuery replications in minutes, not hours.

Key features that make Sling perfect for Postgres to BigQuery transfers:

- Simple CLI and UI interfaces

- Support for various replication modes

- Automatic schema mapping

- Built-in data type conversions

- Progress monitoring and logging

- Scalable performance

Installation

Getting started with Sling is straightforward. You can install the CLI tool using various package managers:

# Install using Homebrew (macOS)

brew install slingdata-io/sling/sling

# Install using curl (Linux)

curl -LO 'https://github.com/slingdata-io/sling-cli/releases/latest/download/sling_linux_amd64.tar.gz' \

&& tar xf sling_linux_amd64.tar.gz \

&& rm -f sling_linux_amd64.tar.gz \

&& chmod +x sling

# Install using Scoop (Windows)

scoop bucket add sling https://github.com/slingdata-io/scoop-sling.git

scoop install sling

# Install using Python pip

pip install sling

After installation, verify that Sling is properly installed by running:

# Check Sling version

sling --version

The CLI tool provides all the functionality needed for database migrations, while the Sling Platform offers a web interface for team collaboration and monitoring. You can sign up for the platform at slingdata.io.

Connection Setup

Before we can start transferring data, we need to set up connections to both PostgreSQL and BigQuery. Let’s configure these connections step by step.

PostgreSQL Connection

You can set up a PostgreSQL connection using either the CLI or the platform interface. Here’s how to do it using the CLI:

# Set up PostgreSQL connection using connection string

sling conns set postgres_source url="postgresql://user:pass@host:5432/dbname?sslmode=require"

# Or set up using individual parameters

sling conns set postgres_source type=postgres host=host.example.com user=myuser password=mypass database=mydb port=5432

BigQuery Connection

For BigQuery, you’ll need a Google Cloud service account with appropriate permissions. Here’s how to set up the connection:

# Set up BigQuery connection with service account key file

sling conns set bigquery_target type=bigquery project=my-project dataset=my_dataset gc_bucket=my-bucket key_file=/path/to/service-account.json

# Or set up using service account JSON content in environment variable

export GC_KEY_BODY='{"type": "service_account", ...}'

sling conns set bigquery_target type=bigquery project=my-project dataset=my_dataset gc_bucket=my-bucket

Testing Connections

Once you’ve set up both connections, it’s important to test them to ensure they’re working correctly:

# Test PostgreSQL connection

sling conns test postgres_source

# Test BigQuery connection

sling conns test bigquery_target

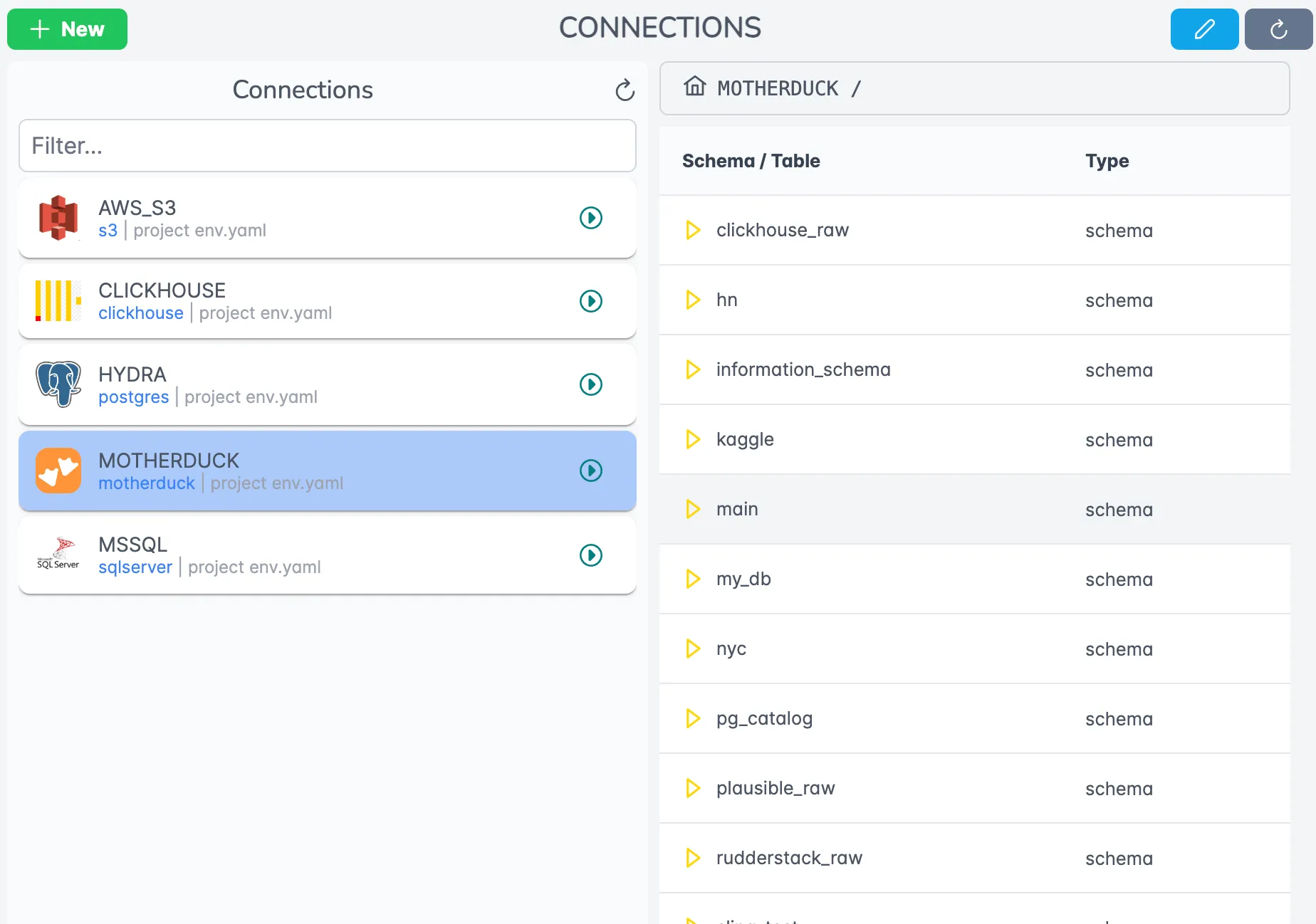

Here’s how connections appear in the Sling Platform interface:

Data Replication Examples

Let’s look at different ways to replicate data from PostgreSQL to BigQuery using Sling. We’ll cover both CLI commands and YAML configurations.

Basic CLI Commands

The simplest way to start a replication is using the CLI command:

# Replicate a single table with full refresh

sling run --src-conn postgres_source --src-stream "public.users" --tgt-conn bigquery_target --tgt-object "users" --mode full-refresh

# Replicate with specific columns

sling run --src-conn postgres_source --src-stream "public.users" --tgt-conn bigquery_target --tgt-object "users" --select "id,name,email,created_at"

YAML Configuration Examples

For more complex replications, YAML configurations provide better maintainability and version control. Here are some examples:

# using wildcards and stream variables

source: postgres_source

target: bigquery_target

defaults:

mode: full-refresh

streams:

'public.*':

object: 'public.{stream_table}'

# using defaults and specific streams

source: postgres_source

target: bigquery_target

defaults:

mode: full-refresh

object: "public.{stream_table}"

streams:

# use defaults for all streams

public.users:

public.orders:

primary_key: [order_id]

public.products:

# incremental

source: postgres_source

target: bigquery_target

streams:

public.orders:

update_key: updated_at

primary_key: [order_id]

To run these YAML configurations:

# Run the replication

sling run -r postgres-bigquery.yaml

Replication Modes

Sling supports different replication modes to suit your needs:

- Full Refresh Mode:

mode: full-refresh

This mode completely replaces the target table with source data.

- Incremental Mode:

mode: incremental

primary_key: [id]

update_key: updated_at

This mode only updates changed records based on a primary key.

- Snapshot Mode:

mode: snapshot

This mode adds new records without modifying existing ones. See the Replication docs for more details.

Conclusion

Sling provides a powerful and flexible solution for moving data from PostgreSQL to BigQuery. Its combination of simple CLI commands and comprehensive YAML configurations makes it suitable for both quick migrations and complex data pipelines.

Key takeaways from this guide:

- Sling offers both CLI and Platform interfaces for managing data transfers

- Multiple replication modes support different use cases

- YAML configurations provide version-controlled, repeatable replications

- Built-in features like data type conversion and transformation simplify the process

To learn more about Sling’s capabilities:

- Visit the documentation

- Join our Discord community

- Follow us on GitHub

Start using Sling today to streamline your PostgreSQL to BigQuery data movement workflows.