The Challenge of Snowflake to BigQuery Data Migration

Moving data between cloud data warehouses like Snowflake and BigQuery traditionally involves complex ETL processes, custom scripts, and significant engineering effort. Common challenges include:

- Setting up and maintaining data extraction processes from Snowflake

- Managing data type compatibility between platforms

- Implementing efficient data loading into BigQuery

- Monitoring and maintaining the data pipeline

- Handling incremental updates and schema changes

Sling simplifies this entire process by providing a streamlined, configuration-based approach that eliminates the need for custom code and complex infrastructure setup.

Installing Sling

Getting started with Sling is straightforward. You can install the CLI tool using various package managers:

# Install using Homebrew (macOS)

brew install slingdata-io/sling/sling

# Install using curl (Linux)

curl -LO 'https://github.com/slingdata-io/sling-cli/releases/latest/download/sling_linux_amd64.tar.gz' \

&& tar xf sling_linux_amd64.tar.gz \

&& rm -f sling_linux_amd64.tar.gz \

&& chmod +x sling

# Install using Scoop (Windows)

scoop bucket add sling https://github.com/slingdata-io/scoop-sling.git

scoop install sling

# Install using Python pip

pip install sling

For more detailed installation instructions, visit the official documentation.

Setting Up Connections

Before we can start replicating data, we need to configure our Snowflake and BigQuery connections. Sling makes this process simple with its connection management system.

First, let’s set up our Snowflake connection:

# Set up Snowflake connection

export SNOWFLAKE_SOURCE="snowflake://${SNOWFLAKE_USER}:${SNOWFLAKE_PASSWORD}@${SNOWFLAKE_ACCOUNT}/${SNOWFLAKE_DATABASE}?warehouse=${SNOWFLAKE_WAREHOUSE}&role=${SNOWFLAKE_ROLE}"

# we should be able to test our connection now

sling conns test snowflake_source

Next, let’s configure the BigQuery connection:

# Set up BigQuery connection

sling conns set bigquery_target type=bigquery project=<project> dataset=<dataset> key_file=/path/to/service.account.json

# we should be able to test our connection now

sling conns test bigquery_target

Creating a Snowflake to BigQuery Replication

Now that our connections are set up, we can create a replication configuration. Create a file named snowflake_to_bigquery.yaml with the following content:

# Define source and target connections

source: snowflake_source

target: bigquery_target

# Set default options for all streams

defaults:

mode: full-refresh

# Define the tables to replicate

streams:

# Replicate a single table

"SALES.ORDERS":

object: "sales_dataset.orders"

primary_key: ["order_id"]

# Replicate multiple tables using wildcards

"SALES.*":

object: "sales_dataset.{stream_table}"

mode: incremental

update_key: "last_modified_at"

target_options:

# Use BigQuery's bulk loading for better performance

use_bulk: true

For more detailed configuration options, refer to the replication documentation.

Running the Replication

With our configuration in place, we can now run the replication using the Sling CLI:

# Run the replication

sling run -r snowflake_to_bigquery.yaml

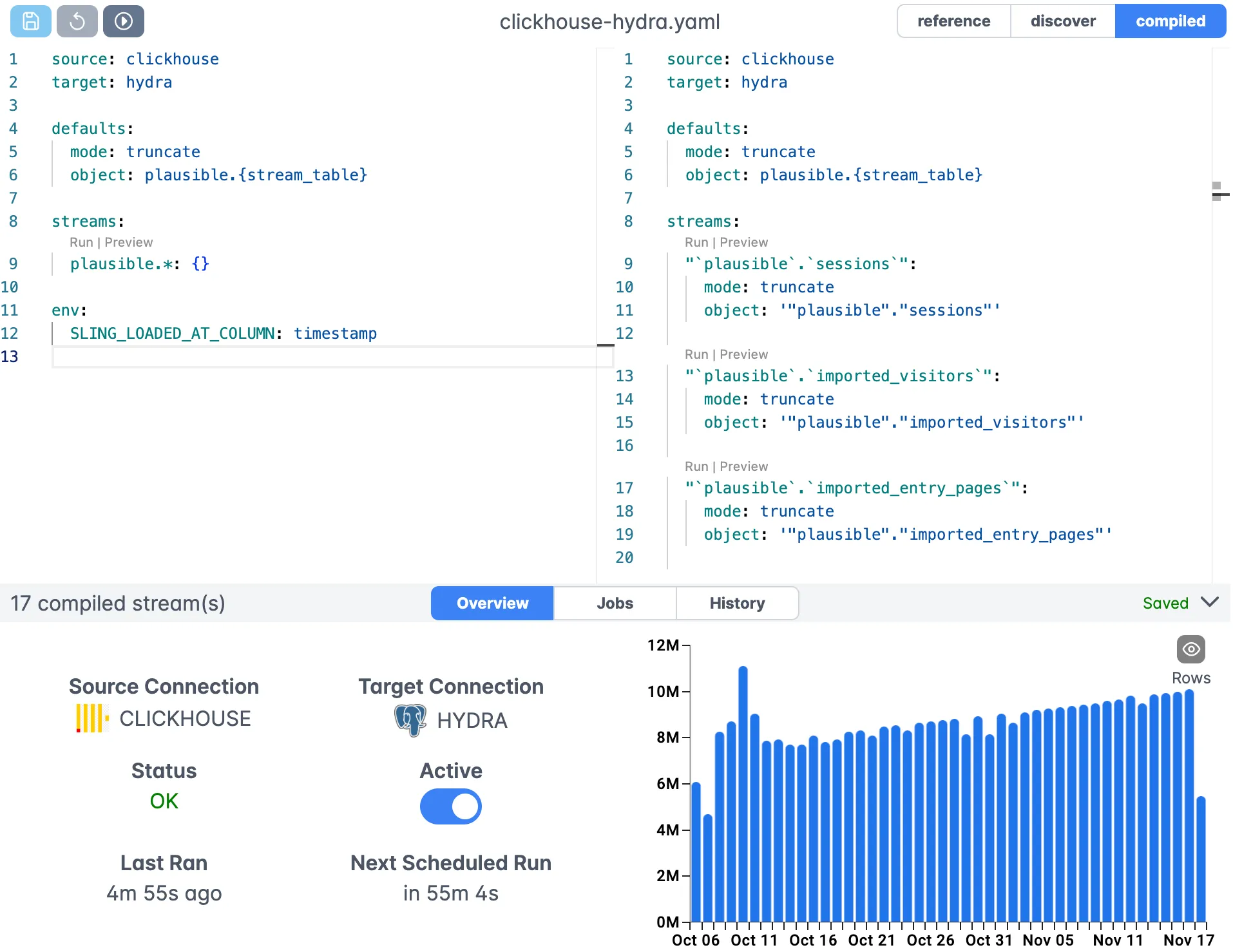

The Sling Platform

While the CLI provides powerful functionality for data replication, the Sling Platform offers a comprehensive UI-based solution for managing your data pipelines at scale.

The platform provides:

- Visual replication configuration

- Real-time monitoring and logging

- Team collaboration features

- Scheduled executions

- Agent management for distributed workloads

Best Practices and Tips

To get the most out of your Snowflake to BigQuery replications:

- Use incremental mode for large tables that update frequently

- Implement appropriate primary keys for data integrity

- Leverage bulk loading for better performance

- Monitor replication logs regularly

- Use runtime variables for flexible configurations

Next Steps

To learn more about Sling’s capabilities:

- Explore database-to-database examples

- Read about replication modes

- Learn about runtime variables

- Check out the Sling Platform documentation