Introduction

In today’s data-driven world, efficiently moving data between different systems is crucial for analytics, reporting, and data warehousing. One common requirement is transferring data from PostgreSQL databases to Parquet files, a columnar storage format that offers excellent compression and query performance. However, setting up this data pipeline traditionally involves multiple tools, complex configurations, and significant maintenance overhead.

Enter Sling, an open-source data movement tool that simplifies this process dramatically. In this guide, we’ll explore how to use Sling to efficiently transfer data from PostgreSQL to Parquet files, making your data pipeline both powerful and maintainable.

Traditional Data Pipeline Challenges

When building a data pipeline to transfer PostgreSQL data to Parquet files, organizations often face several challenges:

- Complex setup requiring multiple tools and dependencies

- Performance bottlenecks when handling large datasets

- Difficulty in maintaining schema consistency

- Resource-intensive ETL processes

- Lack of monitoring and error handling

- High maintenance overhead

These challenges often lead to brittle pipelines that are difficult to maintain and scale. Let’s see how Sling addresses these issues.

Enter Sling: A Modern Solution

Sling is a modern data movement platform designed to simplify data operations. It provides both a powerful CLI tool and a comprehensive platform for managing data workflows between various sources and destinations.

Key Features

- Efficient Data Transfer: Optimized for performance with built-in parallelization

- Simple Configuration: Easy-to-use CLI and YAML-based configuration

- Schema Handling: Automatic schema detection and evolution

- Monitoring: Built-in progress tracking and error reporting

- Flexibility: Support for multiple replication modes and data formats

Platform Components

The Sling platform consists of several key components:

- Sling CLI: A powerful command-line tool for data operations

- Sling Platform: A web-based interface for managing connections and monitoring jobs

- Sling Agents: Worker processes that execute data operations in your infrastructure

- Connection Management: Secure credential storage and connection handling

Let’s dive into how to get started with Sling for your PostgreSQL to Parquet data transfer needs.

Getting Started with Sling

The first step is to install Sling on your system. Sling provides multiple installation methods to suit your environment:

Installation

# Install using Homebrew (macOS)

brew install slingdata-io/sling/sling

# Install using curl (Linux)

curl -LO 'https://github.com/slingdata-io/sling-cli/releases/latest/download/sling_linux_amd64.tar.gz' \

&& tar xf sling_linux_amd64.tar.gz \

&& rm -f sling_linux_amd64.tar.gz \

&& chmod +x sling

# Install using Scoop (Windows)

scoop bucket add sling https://github.com/slingdata-io/scoop-sling.git

scoop install sling

# Install using Python pip

pip install sling

After installation, verify that Sling is properly installed by checking its version:

# Check Sling version

sling --version

For more detailed installation instructions, visit the Sling CLI Getting Started Guide.

Setting Up Connections

Now that Sling is installed, let’s set up the necessary connections for our PostgreSQL to Parquet transfer. Sling manages connections through its environment system, which supports multiple ways to store credentials securely.

PostgreSQL Connection Setup

You can set up a PostgreSQL connection using any of these methods:

Using sling conns set Command

# Set up PostgreSQL connection using individual parameters

sling conns set POSTGRES type=postgres host=localhost user=myuser database=mydb password=mypassword port=5432

# Or use a connection URL

sling conns set POSTGRES url="postgresql://myuser:mypassword@localhost:5432/mydb"

Using Environment Variables

# Set PostgreSQL connection using environment variable

export POSTGRES='postgresql://myuser:mypassword@localhost:5432/mydb'

Using Sling Environment File

Create or edit ~/.sling/env.yaml:

connections:

POSTGRES:

type: postgres

host: localhost

user: myuser

password: mypassword

port: 5432

database: mydb

schema: public # optional

Local Storage Setup

For Parquet file output, you’ll need a local storage connection. This is simpler as it just requires specifying a local directory:

connections:

LOCAL:

type: local

Verifying Connections

After setting up your connections, verify them using the sling conns commands:

# List all configured connections

sling conns list

# Test PostgreSQL connection

sling conns test POSTGRES

# Discover available tables in PostgreSQL

sling conns discover POSTGRES

For more details about connection configuration, refer to the Sling Environment Documentation.

Basic Data Transfer with CLI Flags

The simplest way to transfer data from PostgreSQL to Parquet files is using Sling’s CLI flags. This method is perfect for quick transfers and testing.

Simple Transfer Example

# Transfer a single table to a Parquet file

sling run \

--src-conn POSTGRES \

--src-stream "public.users" \

--tgt-conn LOCAL \

--tgt-object "file://./data/users.parquet"

Advanced CLI Options

# Transfer with custom SQL query and options

sling run \

--src-conn POSTGRES \

--src-stream "SELECT id, name, email, created_at FROM users WHERE created_at > '2023-01-01'" \

--tgt-conn LOCAL \

--tgt-object "file://./data/recent_users.parquet" \

--tgt-opts "file_max_bytes=100000000,compression=snappy"

For more details about CLI flags, visit the CLI Flags Documentation.

Advanced Data Transfer with Replication YAML

For more complex data transfer scenarios, Sling supports YAML-based replication configurations. This approach offers more control and is better suited for production environments.

Basic Replication Example

Create a file named postgres_to_parquet.yaml:

source: POSTGRES

target: LOCAL

defaults:

mode: full-refresh

target_options:

format: parquet

compression: snappy

file_max_bytes: 100000000

streams:

# Export all tables in schema 'private'

private.*:

object: 'file:///data/private/{stream_table}.json'

mode: full-refresh

public.users:

object: file:///data/users/users.parquet

columns:

id: int

name: string

email: string

created_at: timestamp

public.orders:

object: file:///data/orders/orders.parquet

primary_key: [order_id]

select:

- order_id

- user_id

- total_amount

- status

- created_at

Advanced Replication Example

Here’s a more complex example with multiple streams and custom options:

source: POSTGRES

target: LOCAL

defaults:

mode: incremental

target_options:

format: parquet

compression: snappy

file_max_bytes: 500000000

streams:

# Custom SQL query with specific columns

analytics.daily_metrics:

sql: |

SELECT

date,

product_id,

SUM(revenue) as daily_revenue,

COUNT(DISTINCT user_id) as unique_users

FROM raw_events

WHERE date >= '2023-01-01'

GROUP BY date, product_id

object: file:///data/metrics/daily_metrics.parquet

primary_key: [date, product_id]

# Incremental load with update key

public.transactions:

object: file:///data/transactions/{date}/transactions.parquet

mode: incremental

primary_key: [transaction_id]

update_key: updated_at

source_options:

empty_as_null: true

target_options:

file_max_bytes: 100000000

# Multiple tables using wildcard

public.user_*:

object: file:///data/user_data/{stream_name}.parquet

select:

- id

- created_at

- updated_at

- metadata

source_options:

flatten: true

env:

SLING_LOADED_AT_COLUMN: true

SLING_STREAM_URL_COLUMN: true

To run a replication configuration:

# Run the replication

sling run -r postgres_to_parquet.yaml

For more details about replication configuration, see the Replication Documentation.

Using the Sling Platform UI

While the CLI is powerful for automation and scripting, Sling also provides a web-based platform for managing and monitoring data transfers visually.

Platform Features

- Visual Connection Management: Create and manage connections through an intuitive interface

- Job Monitoring: Track the progress and status of your data transfers

- Scheduling: Set up recurring transfers with built-in scheduling

- Error Handling: Visual error tracking and debugging

- Team Collaboration: Share connections and configurations with team members

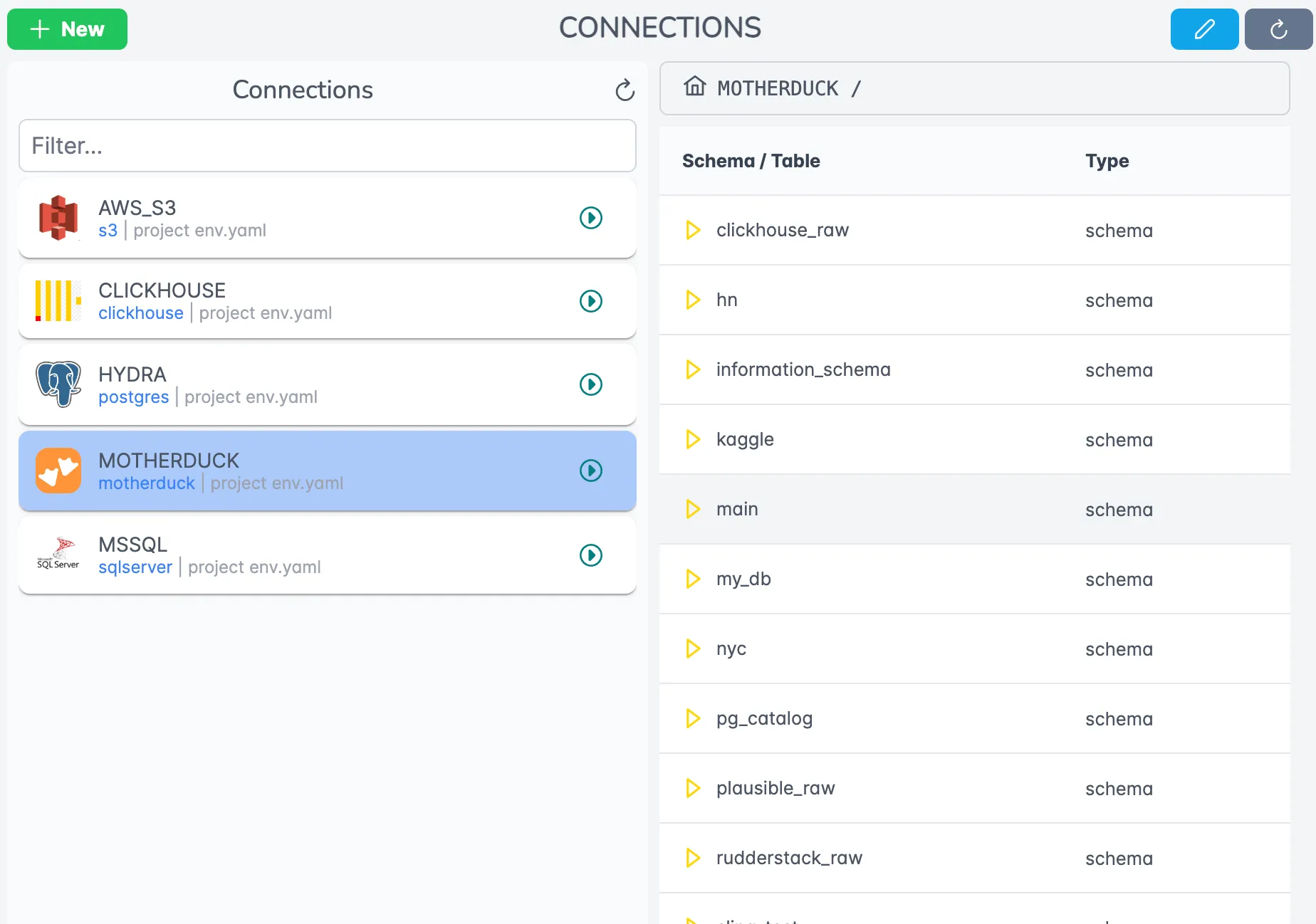

Connection Management

The Sling Platform provides an intuitive interface for managing your connections:

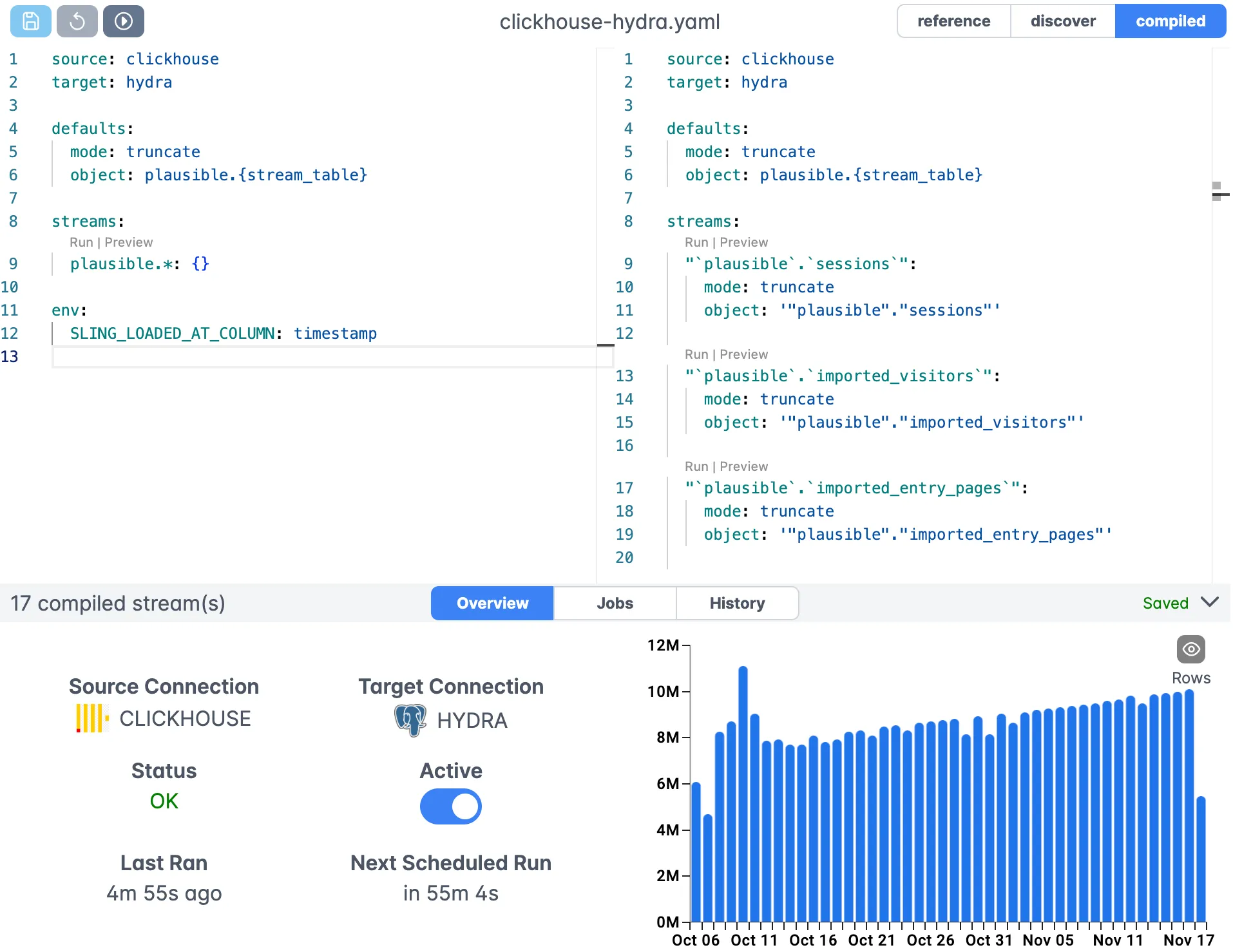

Replication Editor

Create and edit your replication configurations with the visual editor:

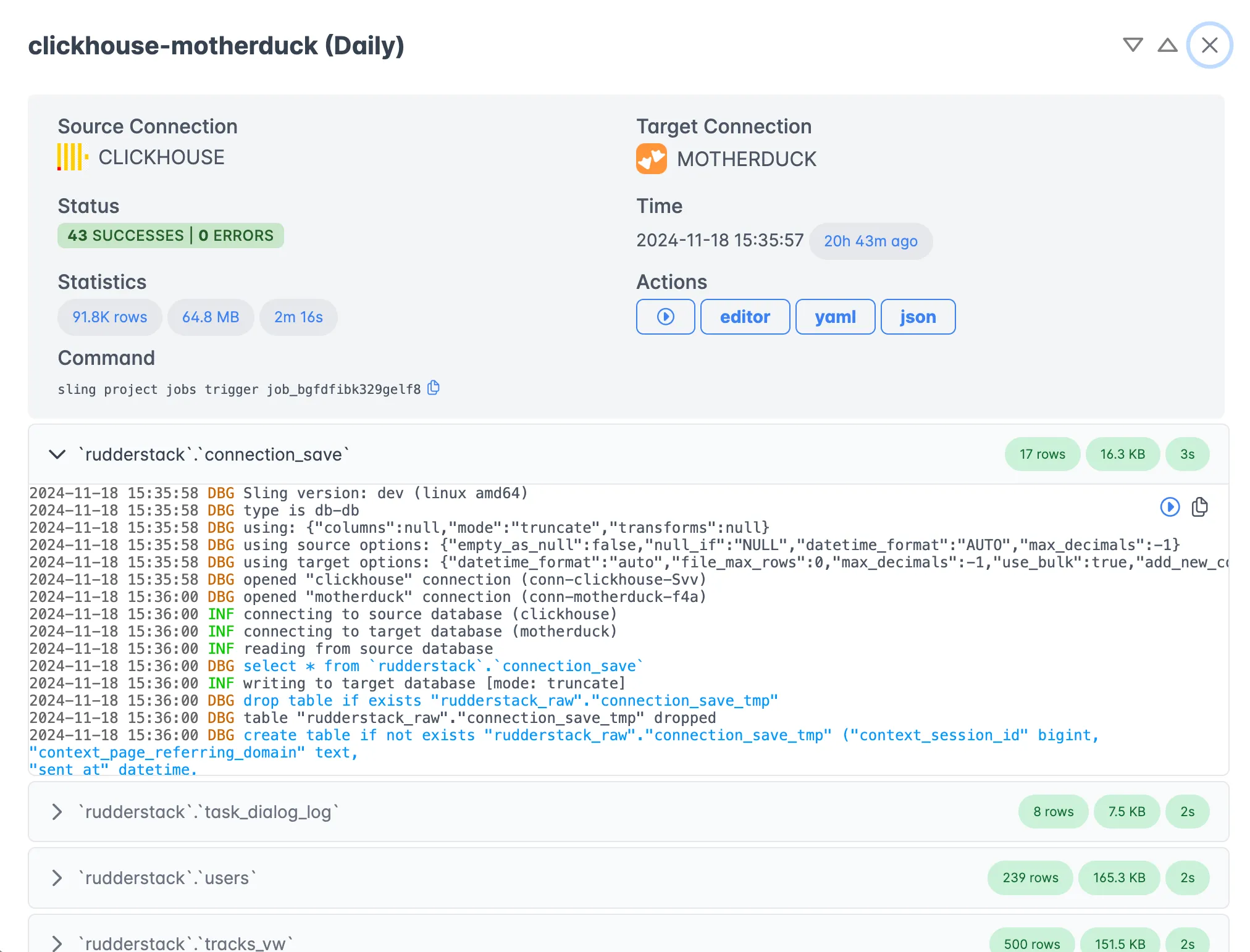

Job Monitoring

Monitor your data transfers in real-time with detailed execution information:

Getting Started with the Platform

- Sign up for a Sling account at platform.slingdata.io

- Create your connections in the web interface

- Set up your first replication job

- Monitor the transfer progress in real-time

For more information about the Sling Platform, visit the Platform Documentation.

Best Practices and Optimization

To get the most out of your PostgreSQL to Parquet transfers with Sling, consider these best practices:

- Use Incremental Mode: For large tables that update frequently, use incremental mode with an appropriate update key

- Optimize File Sizes: Configure

file_max_bytesbased on your downstream processing requirements - Choose Compression: Use

snappycompression for a good balance of speed and compression ratio - Leverage Parallelization: Sling automatically parallelizes operations when possible

- Monitor Memory Usage: Adjust batch sizes for optimal performance

- Regular Testing: Use the

sling conns testcommand to verify connections regularly

Conclusion

Sling provides a powerful and flexible solution for transferring data from PostgreSQL to Parquet files. Whether you prefer the simplicity of CLI commands or the convenience of the web platform, Sling offers the tools you need to build robust data pipelines.

For more examples and detailed documentation, visit:

Join the Sling community: